Efi Chalikopoulou for Vox

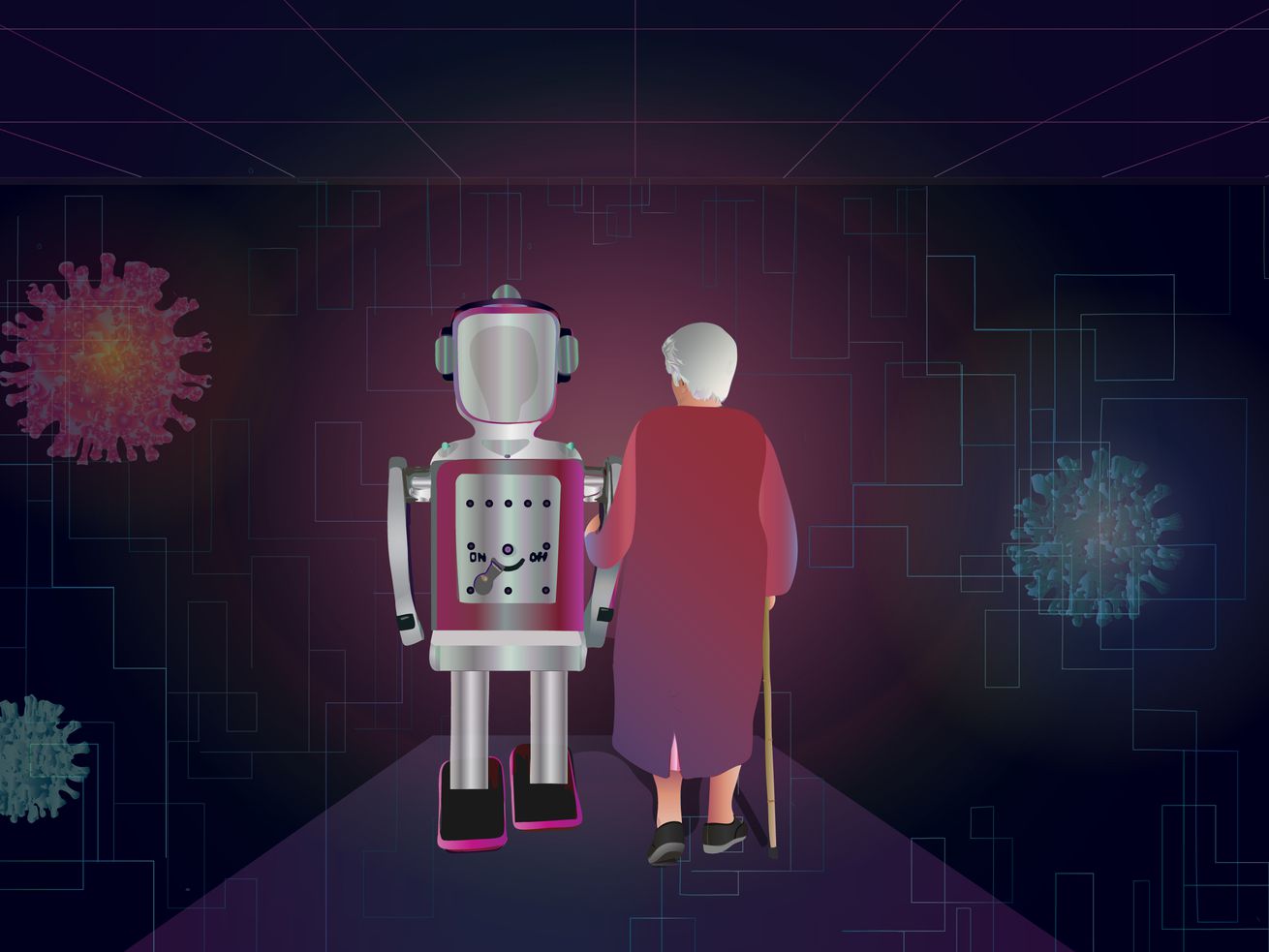

The ethical costs and benefits of a companion robot — during the pandemic and beyond.

Mabel LeRuzic, age 90, lives alone — but not really.

“He’s my baby,” she tells me over Zoom, holding up a puppy to the camera. “Huh, Lucky? Yes! Say hello!”

Lucky barks at me.

I laugh and say, “Who’s a good robot?”

Lucky barks again, and the sound is convincing, as if it’s coming from a real dog. He’s got a tail that wags, eyes that open and close, and a head that turns to face you when you talk. Under his synthetic golden fur, he has sensors that respond to your touch and a heartbeat you can feel.

LeRuzic, who lives in a rural area outside Albany, is fully aware that her pet is a robot. But ever since she got him in March, he’s made her feel less lonely, she says. She enjoys watching TV with him, brushing his fur with a little hairbrush, and tucking him in each night in a bed she’s made out of a box and towel.

She hugs him and coos into his floppy ear, “I love you! Yes, I do!”

She’s not the only one embracing robots these days.

Even before Covid-19 came around, robots like these were being introduced in nursing homes and other settings where lonely people are in need of companionship — especially in aging societies like Japan, Denmark, and Italy. Now, the pandemic has provided the ultimate use case for them.

This spring, more than 1,100 seniors, including LeRuzic, received robotic pets through the Association on Aging in New York, an advocacy organization. Another 375 people received them through the Florida Department of Elder Affairs. Retirement communities and senior services departments in Alabama, Pennsylvania, and several other states have begun buying robots for older adults.

BSIP/Universal Images Group via Getty Images

Robots designed to play social roles come in many forms. Some seem like little more than advanced mechanical toys, but they have the added capacity to sense their environment and respond to it. Many of these mimic cute animals — dogs and cats are especially popular — that issue comforting little barks and meows. Other robots have more humanoid features and talk to you like a person would. ElliQ will greet you with a friendly “Hi, it’s a pleasure to meet you” and tell you jokes; SanTO will read to you from the Bible and bless you; Pepper will play music and have a full-on dance party with you.

Companies have also designed robots to help with the physical tasks of caregiving. You can get Secom’s My Spoon robot to feed you, Sanyo’s electric bathtub robot to wash you, and Riken’s RIBA robot to lift you out of bed and into a chair. These robots have been around for years, and they work surprisingly well.

There’s a decent body of research suggesting that interacting with social robots can improve people’s well-being, although the effects vary depending on the individual person, their cultural context, and the type of robot.

The best-studied robot, Paro, comes in the form of a baby harp seal. It's adorable, but US regulators have recognized it as more than that, classifying it as a medical device. It coos and moves, and its built-in sensors enable it to recognize certain words and feel how it's being touched, whether it's being stroked or hit, say. It learns to behave in the way the user prefers, remembering the actions that earned it a stroke and trying to repeat those. In older adults, notably those with dementia, Paro can reduce loneliness, depression, agitation, blood pressure, and even the need for some medications.

Social robots come with other benefits. Unlike human caregivers, robotic ones never get impatient or frustrated. They'll never forget a pill or a doctor's appointment. And they won't abuse or defraud anyone, whereas abuse and fraud are real problems among people who care for elders, including family members.

Yamaguchi Haruyoshi/Corbis via Getty Images

During the pandemic, when we’re all forced to socially distance from other human beings, robots come with another major advantage: They can roll right up to seniors and keep them company without any risk of giving them the coronavirus. It’s no wonder they’re being touted as a fix for the isolation of older people and others who are at high risk of severe Covid-19.

But the rise of social robots has also brought with it some thorny questions. Some bioethicists are all in favor of them — like Nancy Jecker at the University of Washington, who published a paper in July arguing for increased robot use during and after the pandemic on the grounds that they can alleviate loneliness, itself an epidemic that’s seriously harmful to human health.

Others are not so sure. Although there’s a strong case to be made for using robots in a pandemic, the rise of robot caregiving during the coronavirus crisis raises the possibility of robots becoming the new normal even in non-pandemic times. Many robots are already commercially available, and some are cheap enough that middle-class consumers can easily snag them online (LeRuzic’s dog costs $130, for example). Although social robots are not yet as widely used in the US as they are in Japan, we’ve all been inching toward a future where they’re ubiquitous, and the pandemic has accelerated that timeline.

That has some people worried. “We know that we already underinvest in human care,” Shannon Vallor, a philosopher of technology at the University of Edinburgh, told me. “We have very good reasons, in the pandemic context, to prefer a robot option. The problem is, what happens when the pandemic threat has abated? We might get in this mindset where we’ve normalized the substitution of human care with machine care. And I do worry about that.”

That substitution brings up a whole host of moral risks, which have to do with violating seniors’ dignity, privacy, freedom, and much more.

But, as Vallor pointed out, “If someone wants to be given the answer to ‘Are social robots good for us?’ — that question is at the wrong level of granularity. The question should be ‘When are robots good for us? And how can they be bad for us?’”

The ethics of care in a future with robots

Replacing or supplementing human caregivers with robots could be detrimental to the person being cared for. There are a few different ways that could happen.

For one thing, human contact is already in danger of becoming a luxury good as we build robots to do people's work more cheaply. Getting robots to take on more and more caregiving duties could mean reducing seniors' level of human contact even further.

“It might be convenient to have an automated spoon feeding a frail elderly person, but this would remove an opportunity for detailed and caring human interaction,” the robot ethics experts Amanda Sharkey and Noel Sharkey noted in their paper “Granny and the Robots.”

As companies urge us to let their robots care for our parents and grandparents, we might feel like we don’t need to visit them as much, figuring they’ve already got the company they need. That would be a mistake. For many older adults, interacting with a robot would feel less emotionally satisfying than interacting with a person because of the sense that whatever the robot says or does is not “authentic,” not based on real thoughts and feelings.

But for those who have no one or very few people to interact with, contact with a robot is probably better than no contact at all. And if we use them wisely, robots can improve quality of life. Take LeRuzic and her dog. When I asked if her grandchildren visit less often now that they know she’s got a robot, she said no. In fact, Lucky has given her and her granddaughter Brandie an extra way to connect, because Brandie also got a new puppy (a real one) in March. “We’ve found a lot in common,” she told me. “Except one of us doesn’t have to deal with vet bills!”

Some particularly well-designed robots, like Paro, have also been shown to increase human-to-human interaction among nursing home residents and between seniors and their kids. The robots give them something positive to focus on and talk about together.

Another common worry is that robots may violate human dignity because it can be demeaning and objectifying to have a machine wash or move you, as if you’re a lump of dead matter. But Filippo Santoni de Sio, a tech ethics professor at Delft University of Technology in the Netherlands, emphasized that individual tastes differ.

“It depends,” he told me. “For some people, it’s more dignifying to be assisted by a machine that does not understand what’s going on. Some may not like anyone to see them naked or assist them with washing.”

Then there are concerns about violating the privacy and personal liberty of seniors. Some robots marketed for elder care come with built-in cameras that essentially allow people to spy on their parents or grandparents, or nurses to surveil their charges. As early as 2002, robots designed to look like teddy bears were being used in Japanese retirement homes, where they’d watch over residents and alert staff whenever someone left their bed.

You might argue this is for the seniors’ own good because it will prevent them from getting hurt. But such constant monitoring seems ethically problematic, especially when you consider that the senior may forget the robot in their room is watching — and reporting on — their every move.

There may be ways to solve these problems, though, if roboticists design with an eye to safeguarding privacy and freedom. For example, they can program a robot so it needs to get the senior’s permission before entering a room or before lifting them out of bed.

The difference between liberation from care and liberation to care

There's another factor to wonder, and worry, about: Can getting robots to do the work of caregiving also be detrimental to the would-be caregivers?

Vallor lays out the case for this claim in an important 2011 paper, “Carebots and Caregivers.” She argues that the experience of caregiving helps build our moral character, allowing us to cultivate virtues like empathy, patience, and understanding. So outsourcing that work wouldn’t just mean abdicating our duty to nurture others; it would also mean cheating ourselves out of a valuable opportunity to grow.

“If the availability of robot care seduces us into abandoning caregiving practices before we have had sufficient opportunities to cultivate the virtues of empathy and reciprocity, among others,” Vallor writes, “the impact upon our moral character, and society, could be quite devastating.”

She’s careful to note, though, that caring for someone else doesn’t automatically make you into a better person. If you don’t have enough resources and support at your disposal, you can end up burned out, bitter, and possibly less empathetic than you were before.

So Vallor continues: “On the other hand, if carebots provide forms of limited support that draw us further into caregiving practices, able to feel more and give more, freed from the fear that we will be crushed by unbearable burdens, then the moral effect on the character of caregivers could be remarkably positive.”

Again, robots aren’t inherently good or bad; it depends on how you use them. If you generally feel good about caring for a senior except for a couple of tasks that are too physically or emotionally difficult — say, lifting him up and taking him to the bathroom — then having a robot to help you with those specific tasks might actually make it easier for you to care more, and care better, the rest of the time.

As Vallor says, there’s a big difference between liberation from care and liberation to care. We don’t want the former because caregiving can actually help us grow as moral beings. But we do want the latter, and if a robot gives us that by making caregiving more sustainable, that’s a win.

What if people come to prefer robots over other people?

There’s another concern we haven’t considered yet: A robot might provide company that the senior finds not inferior, but actually superior, to human company. After all, a robot has no wants or needs of its own. It doesn’t judge. It’s infinitely forgiving.

You can glimpse this sentiment in the words of Deanna Dezern, an 80-year-old woman in Florida living with an ElliQ robot. “I’m in quarantine with my best friend,” she said. “She won’t have her feelings hurt and she doesn’t get moody, and she puts up with my moods, and that’s the best friend anybody can have.”

Dezern might be happy with this arrangement (at least so long as the pandemic lasts), and the preferences of seniors themselves are obviously crucial. But some philosophers have raised concerns about whether this setup risks degrading our humanity over the long term. The prospect of people coming to prefer robots over fellow people is problematic if you think human-to-human connection is an essential part of what it means to live a flourishing life, not least because others’ needs and moods are part of what makes life meaningful.

Laura Lezza/Getty Images

“If we had technologies that drew us into a bubble of self-absorption in which we drew further and further away from one another, I don’t think that’s something we can regard as good, even if that’s what people choose,” Vallor says. “Because you then have a world in which people no longer have any desire to care for one another. And I think the ability to live a caring life is pretty close to a universal good. Caring is part of how you grow as a human.”

Yes, individual autonomy is important. But not everything an individual chooses is necessarily what’s good for them.

“In society, we always have to recognize the danger of being overly paternalistic and saying, ‘You don’t know what’s good for you so we’ll choose for you,’ but we also have to avoid the other extreme, the naive libertarian view that suggests that the way you run a flourishing society is leaving everything up to individual whims,” Vallor says. “We need to find that intelligent balance in the middle where we give people a range of ways to live well.”

Santoni de Sio, for his part, says that if a senior has the ability to choose freely, and chooses to spend time with robots instead of people, that’s a legitimate choice. But the choice has to be authentically free — not just the result of market forces (like tech companies pushing us to adopt addictively entertaining robots) or other economic and social pressures.

“We should not buy a simplistic and superficial understanding of what it means to have free choice or to be in control of our lives,” he says. “There’s this narrative that says technology is enhancing our freedom because it’s giving us choices. But is this real freedom? Or a shallow version of it that hides the closing of opportunities? The big philosophical task we have in front of us is redefining freedom and control in the age of Big Tech.”

So, bottom line: Should you buy your grandma a robot?

If you take anything away from this discussion, take away the fact that there’s no single answer to this question. The more productive question is: Under what specific conditions would a robot enhance care, and under what conditions would it degrade care?

During a pandemic, there’s a strong case to be made for using social robots. The benefits they can provide in terms of alleviating loneliness seem to outweigh the risks.

But if we fully embrace robots now, deeming them a fine substitute during a pandemic, how will we make sure they’re not used to disguise ethical gaps in our behavior post-pandemic?

Several tech ethicists say we need to set robust standards for care in the environments where we’re morally obligated to provide it. Just as nursing homes have standards around physical safety and cleanliness, maybe they should have legal restrictions on how long seniors can be left without human contact, with only robots to care for them.

Vallor imagines a future where an inspector reviews facilities on an annual basis and has the power to pull their certification if they’re not providing the requisite level of human contact. “Then, even after the pandemic, we could say, ‘We see that this facility has just carried on with roboticized, automated care when there’s no longer a public health necessity for that, and this falls short of the standards,’” she told me.

But the idea of developing standards around robot care leads to the question: How do we determine the right standards?

Santoni de Sio lays out a framework called the “nature-of-activities approach” to help answer this. He distinguishes between a goal-oriented activity, where the activity is a means to achieving some external aim, and a practice-oriented activity, where the performance of the activity is itself the aim. In reality, an activity is always a mix of the two, but usually one element predominates. Commuting to work is mostly goal-oriented; watching a play is mostly practice-oriented.

In the caregiving context, most people would say that reminding an elderly person to take their medication or collecting a urine sample for testing is mostly goal-oriented, so for that type of activity, it’s okay to substitute a robot for a nurse. By contrast, watching a movie with the senior or listening to their stories is mostly practice-oriented. You doing the activity with them — your very presence — is the point. So it matters that you, the human being, be there.

Thomas Lohnes/Getty Images

There’s some intuitive appeal to this division — certain discrete tasks go to the robot, while the broader process of showing up emotionally to listen, laugh, and cry remains our human responsibility. And it echoes a claim we often hear about artificial intelligence and the future of work: that we’ll just automate the dull and repetitive tasks but leave the work that calls on our highest cognitive and emotional faculties.

Vallor says that sounds good on the surface — until you look at what it actually does to people. “You’re making them turn their cognitive and emotional faculties up to 11 for many hours instead of having those moments of decompression where they do something mindless to recharge,” she says. “You cannot divide up the world such that they are performing intense emotional and relational labor for periods that the human body is just not capable of sustaining, while you have the robots do all the things that sometimes humans do to get a break.”

This point suggests we can’t rely on any one conceptual distinction to take the nuance out of the problem. Instead, when deciding which aspects of human connection can be automated and which cannot, we should ask ourselves several questions. Is it goal-oriented or practice-oriented? Is it liberating us from care or liberating us to care? And who benefits — truly, authentically benefits — from bringing robots into the social and caregiving realm?

from Vox - All https://ift.tt/2Zk2sHh